Python vs. Node vs. PyPy

Tuesday, October 23, 2012 » performance

OpenStack has proven that you can build robust, scalable cloud services with Python. On the other hand, startups like Voxer are doing brilliant things with Node.js. That made me curious, so I took some time to learn Node, then ported a web-scale message bus project of mine from Python to JavaScript.

With Python and Node implementations in hand, I compared the two in terms of performance, community, developer productivity, etc. I also took the opportunity to test-drive PyPy; it seemed appropriate, given that both PyPy and V8 [1] rely on JIT compilation to boost performance.

In this particular post, I’d like to share my discoveries concerning Python vs. Node performance.

Update: See my follow-up post on Python vs. Node, in which I share my results from a more formal, rigorous round of performance testing.

Testing Methodology

For each test, I executed 5,000 GETs with ApacheBench (ab). I ran each benchmark 3 times from localhost [2] on a 4-core Rackspace Cloud Server [3], retaining only the numbers for the best-performing iteration [4].
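As a rough illustration of the "best of 3 runs" procedure, here is a minimal sketch in modern Python 3 (not the Python 2.7 used in the tests). It shells out to ab and keeps the highest requests/sec figure. The URL and the default concurrency level are assumptions made for the example; the function names are hypothetical and are not taken from my actual test scripts.

```python
import re
import subprocess

# Matches the "Requests per second:    1234.56 [#/sec] (mean)" line in ab's report.
RPS_PATTERN = re.compile(r"^Requests per second:\s+([\d.]+)", re.MULTILINE)


def parse_requests_per_second(ab_output):
    """Extract the mean requests/sec figure from ab's text report."""
    match = RPS_PATTERN.search(ab_output)
    if match is None:
        raise ValueError("no 'Requests per second' line found in ab output")
    return float(match.group(1))


def run_ab(url, requests=5000, concurrency=100):
    """Run ab once (-n total requests, -c concurrency) and return requests/sec.

    The concurrency default is an assumed value, chosen only for illustration.
    """
    cmd = ["ab", "-n", str(requests), "-c", str(concurrency), url]
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return parse_requests_per_second(result.stdout)


def best_of(url, runs=3, **kwargs):
    """Repeat the benchmark and keep only the best-performing iteration."""
    return max(run_ab(url, **kwargs) for _ in range(runs))
```

Calling `best_of("http://127.0.0.1:8000/")` (a placeholder address) would run ab three times against a local server and return the fastest result. Keeping the best run rather than the mean flatters every contender equally, which is worth remembering when reading the numbers.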

All stacks were configured to spawn 4 worker processes, backed by a single, local mongod [5] for storage. The DB was primed so that each GET resulted in a non-empty response body of about 420 bytes [6].
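For the Gunicorn-based stacks, a setup along these lines can be expressed as a Gunicorn config file, which is itself plain Python. This is only a sketch: the file name, bind address, and app module are assumptions, not my actual configuration.

```python
# gunicorn.conf.py (hypothetical file name; values are illustrative assumptions)

# Address the workers listen on; the real address is not stated in this post.
bind = "127.0.0.1:8000"

# One worker process per core on the 4-core test server.
workers = 4

# "sync" for the plain workers; "eventlet" or "tornado" would select the
# evented worker types compared in this post.
worker_class = "sync"
```

A server would then be started with something like `gunicorn -c gunicorn.conf.py app:application`, where `app:application` is a placeholder for the WSGI module and callable.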

~ Insert obligatory benchmark disclaimer here. ~

Teh Contenders

CPython (Sync Worker): Python v2.7 + Gunicorn [7] v0.14.6 + Rawr [8]

CPython (Eventlet Worker): Python v2.7 + Gunicorn v0.14.6 + Eventlet v0.9.17 + Rawr

PyPy (Sync Worker): PyPy 1.8 + Gunicorn v0.14.6 + Rawr

PyPy (Tornado Worker): Gunicorn v0.14.6 + Tornado [9] + Rawr

Node v0.8.11: Node v0.8.11 + Cluster + Connect [10] v2.6.0

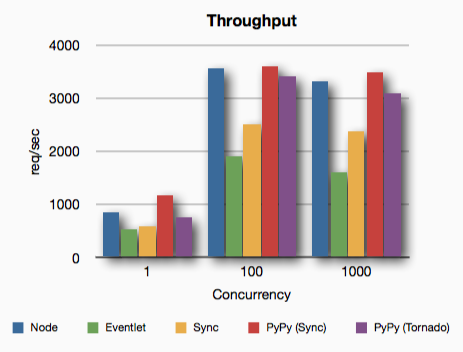

Throughput Benchmarks

In terms of throughput, Node.js is hard to beat. It’s almost twice as fast as CPython/Eventlet workers. Interestingly, Node.js spanks Eventlet even while serving a single request at a time. It’s obvious that Node’s async framework is far more efficient than Eventlet’s.

PyPy comes to the rescue, demonstrating the power of a good JIT compiler. The big surprise here is how well the PyPy/Sync workers perform. But there’s more to the story…

Not shown in the graph (below) are a few informal tests I ran to see what would happen at even higher levels of concurrency. At 5,000 concurrent requests, PyPy/Tornado and Node.js exhibited the best performance at ~4100 req/sec, followed closely by PyPy/Sync at ~3800 req/sec, then CPython/Sync at ~2200 req/sec. I couldn’t get CPython/Eventlet to finish all 5,000 requests without ab giving up due to socket errors.

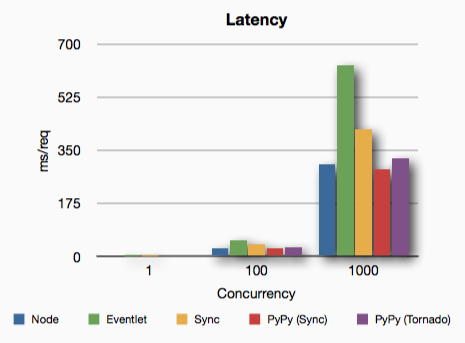

Latency Benchmarks

CPython/Eventlet demonstrates high per-request overhead, turning around requests about 30% slower (in the worst case) than non-evented CPython workers.

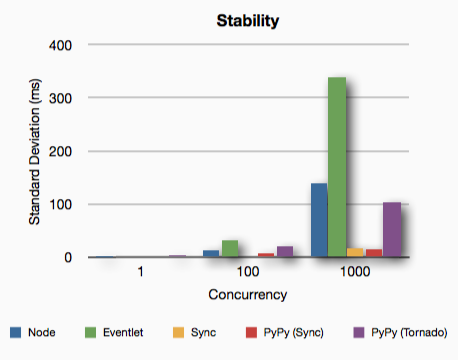

Stability Benchmarks

With a high number of concurrent connections, PyPy’s performance is very consistent. CPython/Sync also does well in this regard.

Node is less consistent than I expected, given its finely tuned event loop. It’s possible that node-mongodb-native is to blame, but that’s pure conjecture on my part.
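One simple way to put a number on "consistency" is the coefficient of variation of per-request latencies: the standard deviation divided by the mean. The sketch below is an illustration only and is not how the charts in this post were produced. The sample values are made up for the example.

```python
import statistics


def coefficient_of_variation(samples):
    """Return stddev / mean; lower values mean more consistent latencies."""
    mean = statistics.mean(samples)
    if mean == 0:
        raise ValueError("mean latency is zero; ratio is undefined")
    return statistics.pstdev(samples) / mean


# Made-up latencies in milliseconds, purely for illustration.
steady = [10.0, 10.0, 10.0, 10.0]   # every request takes the same time
spiky = [5.0, 5.0, 5.0, 25.0]       # same mean, but one slow outlier

print(coefficient_of_variation(steady))
print(coefficient_of_variation(spiky))
```

Both sample sets have the same 10 ms mean, so a throughput chart alone would not tell them apart. The ratio is what separates a steady server from one with occasional slow requests.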

Conclusions

Node’s asynchronous subsystem incurs far less overhead than Eventlet, and is slightly faster than Tornado running under PyPy. However, it appears that for moderate numbers of concurrent requests, the overhead inherent in any async framework simply isn’t worthwhile [11]. In other words, async only makes sense when serving several thousand concurrent requests.

CPython’s lackluster performance makes a strong case for migrating to PyPy for existing projects, and for considering Node.js as a viable alternative to Python for new projects. That being said, PyPy is not nearly as battle-tested as CPython; caveat emptor!

- [1] Node uses Google’s V8 JavaScript engine under the hood.

- [2] Running ab on the same box as the server avoids networking anomalies that could bias test results.

- [3] CentOS 5.5, 8 GB RAM, ORD region, first-generation cloud server platform.

- [4] What can I say? I’m an insufferable optimist.

- [5] A lot of FUD has gone around re MongoDB. I’m convinced that most bad experiences with Mongo are due to one or more of the following: (1) Developers consciously (or subconsciously) expecting MongoDB to act like an RDBMS, (2) the perpetuation of the myth that MongoDB is not durable, and (3) trying to run MongoDB on servers with slow disks and/or insufficient RAM.

- [6] The response body was chosen as an example of a typical JSON document that might be returned from the message bus service.

- [7] Gunicorn is a very fast WSGI worker proxy, similar to Node’s Cluster module. Gunicorn supports running various worker types, including basic synchronous workers, Eventlet workers, and Tornado workers.

- [8] Based on extensive benchmarking, I ended up writing a custom micro web-services framework. Protip: Don’t use webob for parsing requests; it’s dog-slow.

- [9] Used in lieu of Eventlet, since Eventlet was extremely slow under PyPy in my benchmarks.

- [10] Connect adds virtually no overhead; I wanted to use a framework that was fast, yet mainstream.

- [11] Warning: Don’t expose sync processes to the Internet; run behind a non-blocking server such as Nginx to guard against not only malicious attacks (à la Slowloris), but also against the Thundering Herd.